
This manual outlines the best practice in designing, installing, commissioning and troubleshooting industrial data communications systems.

Back to Basics

Web Site: www.idc-online.com

E-mail: idc@idc-online.com

Copyright

All rights to this publication, associated software and workshop are reserved. No part of this publication or associated software may be copied, reproduced, transmitted or stored in any form or by any means (including electronic, mechanical, photocopying, recording or otherwise) without prior written permission of IDC Technologies.

Disclaimer

Whilst all reasonable care has been taken to ensure that the descriptions, opinions, programs, listings, software and diagrams are accurate and workable, IDC Technologies do not accept any legal responsibility or liability to any person, organization or other entity for any direct loss, consequential loss or damage, however caused, that may be suffered as a result of the use of this publication or the associated workshop and software.

In case of any uncertainty, we recommend that you contact IDC Technologies for clarification or assistance.

Trademarks

All terms noted in this publication that are believed to be registered trademarks or trademarks are listed below:

IBM, XT and AT are registered trademarks of International Business Machines Corporation. Microsoft, MS-DOS and Windows are registered trademarks of Microsoft Corporation.

Acknowledgements

IDC Technologies expresses its sincere thanks to all those engineers and technicians on our training workshops who freely made available their expertise in preparing this manual.

Who is IDC Technologies?

IDC Technologies is a specialist in the field of industrial communications, telecommunications, automation and control and has been providing high quality training for more than six years on an international basis from offices around the world.

IDC consists of an enthusiastic team of professional engineers and support staff who are committed to providing the highest quality in their consulting and training services.

The Benefits to you of Technical Training Today

The technological world today presents tremendous challenges to engineers, scientists and technicians in keeping up to date and taking advantage of the latest developments in the key technology areas.

The immediate benefits of attending IDC workshops are:

- Gain practical hands-on experience

- Enhance your expertise and credibility

- Save $$$s for your company

- Obtain state of the art knowledge for your company

- Learn new approaches to troubleshooting

- Improve your future career prospects

The IDC Approach to Training

All workshops have been carefully structured to ensure that attendees gain maximum benefits. A combination of carefully designed training software, hardware and well written documentation, together with multimedia techniques, ensures that the workshops are presented in an interesting, stimulating and logical fashion.

IDC has structured a number of workshops to cover the major areas of technology. These courses are presented by instructors who are experts in their fields, and have been attended by thousands of engineers, technicians and scientists world-wide (over 11,000 in the past two years), who have given excellent reviews. The IDC team of professional engineers is constantly reviewing the courses and talking to industry leaders in these fields, thus keeping the workshops topical and up to date.

Technical Training Workshops

IDC is continually developing high quality state of the art workshops aimed at assisting engineers, technicians and scientists. Current workshops include:

Instrumentation & Control

- Practical Automation and Process Control using PLC’s

- Practical Data Acquisition using Personal Computers and Standalone Systems

- Practical On-line Analytical Instrumentation for Engineers and Technicians

- Practical Flow Measurement for Engineers and Technicians

- Practical Intrinsic Safety for Engineers and Technicians

- Practical Safety Instrumentation and Shut-down Systems for Industry

- Practical Process Control for Engineers and Technicians

- Practical Programming for Industrial Control – using (IEC 1131-3;OPC)

- Practical SCADA Systems for Industry

- Practical Boiler Control and Instrumentation for Engineers and Technicians

- Practical Process Instrumentation for Engineers and Technicians

- Practical Motion Control for Engineers and Technicians

- Practical Communications, SCADA & PLC’s for Managers

Communications

- Practical Data Communications for Engineers and Technicians

- Practical Essentials of SNMP Network Management

- Practical Field Bus and Device Networks for Engineers and Technicians

- Practical Industrial Communication Protocols

- Practical Fibre Optics for Engineers and Technicians

- Practical Industrial Networking for Engineers and Technicians

- Practical TCP/IP & Ethernet Networking for Industry

- Practical Telecommunications for Engineers and Technicians

- Practical Radio & Telemetry Systems for Industry

- Practical Local Area Networks for Engineers and Technicians

- Practical Mobile Radio Systems for Industry

Electrical

- Practical Power Systems Protection for Engineers and Technicians

- Practical High Voltage Safety Operating Procedures for Engineers & Technicians

- Practical Solutions to Power Quality Problems for Engineers and Technicians

- Practical Communications and Automation for Electrical Networks

- Practical Power Distribution

- Practical Variable Speed Drives for Instrumentation and Control Systems

Project & Financial Management

- Practical Project Management for Engineers and Technicians

- Practical Financial Management and Project Investment Analysis

- How to Manage Consultants

Mechanical Engineering

- Practical Boiler Plant Operation and Management for Engineers and Technicians

- Practical Centrifugal Pumps – Efficient use for Safety & Reliability

Electronics

- Practical Digital Signal Processing Systems for Engineers and Technicians

- Practical Industrial Electronics Workshop

- Practical Image Processing and Applications

- Practical EMC and EMI Control for Engineers and Technicians

Information Technology

- Personal Computer & Network Security (Protect from Hackers, Crackers & Viruses)

- Practical Guide to MCSE Certification

- Practical Application Development for Web Based SCADA

Comprehensive Training Materials

Workshop Documentation

All IDC workshops are fully documented with complete reference materials including comprehensive manuals and practical reference guides.

Software

Relevant software is supplied with most workshops. The software consists of demonstration programs which illustrate the basic theory as well as the more difficult concepts of the workshop.

Hands-On Approach to Training

The IDC engineers have developed the workshops based on the practical consulting expertise that has been built up over the years in various specialist areas. The objective of training today is to gain knowledge and experience in the latest developments in technology through cost effective methods. The investment in training made by companies and individuals is growing each year as the need to keep topical and up to date in the industry in which they are operating is recognized. As a result, the IDC instructors place particular emphasis on the practical hands-on aspect of the workshops presented.

On-Site Workshops

In addition to the quality of workshops which IDC presents on a world-wide basis, all IDC courses are also available for on-site (in-house) presentation at our clients’ premises. On-site training is a cost effective method of training for companies with many delegates to train in a particular area. Organizations can save valuable training $$$’s by holding courses on-site, where costs are significantly less. Another benefit is IDC’s ability to focus on particular systems and equipment so that attendees obtain the greatest benefit from the training.

All on-site workshops are tailored to meet clients’ training requirements, and courses can be presented at beginner, intermediate or advanced levels based on the knowledge and experience of the delegates in attendance. Specific areas of interest to the client can also be covered in more detail. Our external workshops are planned well in advance and you should contact us as early as possible if you require on-site/customized training. While we will always endeavor to meet your timetable preferences, two to three months’ notice is preferable in order to successfully fulfil your requirements. Please don’t hesitate to contact us if you would like to discuss your training needs.

Customized Training

In addition to standard on-site training, IDC specializes in customized courses to meet client training specifications. IDC has the necessary engineering and training expertise and resources to work closely with clients in preparing and presenting specialized courses.

These courses may comprise a combination of all IDC courses along with additional topics and subjects that are required. The benefits to companies in using training are reflected in the increased efficiency of their operations and equipment.

Training Contracts

IDC also specializes in establishing training contracts with companies who require ongoing training for their employees. These contracts can be established over a given period of time and special fees are negotiated with clients based on their requirements. Where possible IDC will also adapt courses to satisfy your training budget.

References from various international companies to whom IDC is contracted to provide on-going technical training are available on request.

Some of the thousands of Companies worldwide that have supported and benefited from IDC workshops are:

Alcoa, Allen-Bradley, Altona Petrochemical, Aluminum Company of America, AMC Mineral Sands, Amgen, Arco Oil and Gas, Argyle Diamond Mine, Associated Pulp and Paper Mill, Bailey Controls, Bechtel, BHP Engineering, Caltex Refining, Canon, Chevron, Coca-Cola, Colgate-Palmolive, Conoco Inc, Dow Chemical, ESKOM, Exxon, Ford, Gillette Company, Honda, Honeywell, Kodak, Lever Brothers, McDonnell Douglas, Mobil, Modicon, Monsanto, Motorola, Nabisco, NASA, National Instruments, National Semiconductor, Omron Electric, Pacific Power, Pirelli Cables, Procter and Gamble, Robert Bosch Corp, Siemens, Smith Kline Beecham, Square D, Texaco, Varian, Warner Lambert, Woodside Offshore Petroleum, Zener Electric

Contents

Preface xi

1 Overview 1

1.1 Introduction 1

1.2 Historical background 2

1.3 Standards 3

1.4 Open systems interconnection (OSI) model 3

1.5 Protocols 4

1.6 Physical standards 5

1.7 Modern instrumentation and control systems 6

1.8 Distributed control systems (DCSs) 7

1.9 Programmable logic controllers (PLCs) 7

1.10 Impact of the microprocessor 8

1.11 Smart instrumentation systems 9

2 Basic principles 11

2.1 Bits, bytes and characters 12

2.2 Communication principles 13

2.3 Communication modes 13

2.4 Asynchronous systems 14

2.5 Synchronous systems 15

2.6 Error detection 16

2.7 Transmission characteristics 17

2.8 Data coding 18

2.9 The universal asynchronous receiver/transmitter (UART) 29

2.10 The high speed UART (16550) 34

3 Serial communication standards 35

3.1 Standards organizations 36

3.2 Serial data communications interface standards 38

3.3 Balanced and unbalanced transmission lines 38

3.4 EIA-232 interface standard (CCITT V.24 interface standard) 40

3.5 Troubleshooting serial data communication circuits 53

3.6 Test equipment 54

3.7 RS-449 interface standard (November 1977) 58

3.8 RS-423 interface standard 58

3.9 The RS-422 interface standard 59

3.10 The RS-485 interface standard 62

3.11 Troubleshooting and testing with RS-485 67

3.12 RS/TIA-530A interface standard (May 1992) 68

3.13 RS/TIA-562 interface standard (June 1992) 68

3.14 Comparison of the EIA interface standards 69

3.15 The 20 mA current loop 70

3.16 Serial interface converters 71

3.17 Interface to serial printers 73

3.18 Parallel data communications interface standards 74

3.19 General purpose interface bus (GPIB) or IEEE-488 or IEC-625 74

3.20 The Centronics interface standard 80

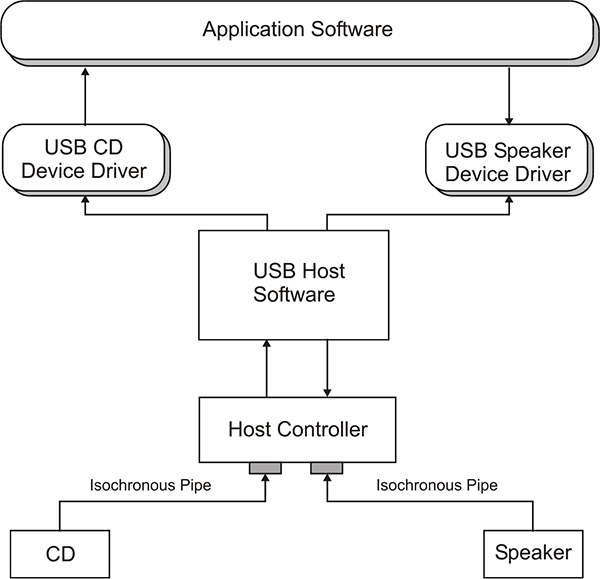

3.21 The universal serial bus (USB) 82

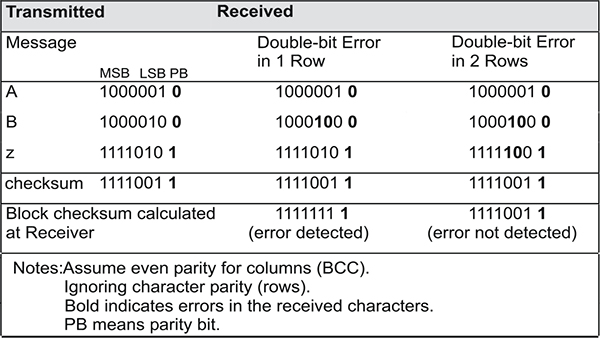

4 Error detection 102

4.1 Origin of errors 102

4.2 Factors affecting signal propagation 103

4.3 Types of error detection, control and correction 104

4.4 Other control mechanisms 111

5 Cabling basics 112

5.1 Overview 112

5.2 Copper-based cables 113

5.3 Twisted pair cables 114

5.4 Coaxial cables 116

5.5 Fiber-optic cables 116

6 Electrical noise and interference 124

6.1 Definition of noise 124

6.2 Frequency analysis of noise 126

6.3 Sources of electrical noise 131

6.4 Electrical coupling of noise 131

6.5 Shielding 138

6.6 Good shielding performance ratios 139

6.7 Cable ducting or raceways 139

6.8 Cable spacing 139

6.9 Earthing and grounding requirements 140

6.10 Suppression techniques 142

6.11 Filtering 144

7 Modems and multiplexers 145

7.1 Introduction 146

7.2 Modes of operation 147

7.3 Synchronous or asynchronous 147

7.4 Interchange circuits 149

7.5 Flow control 149

7.6 Distortion 150

7.7 Modulation techniques 152

7.8 Components of a modem 156

7.9 Types of modem 158

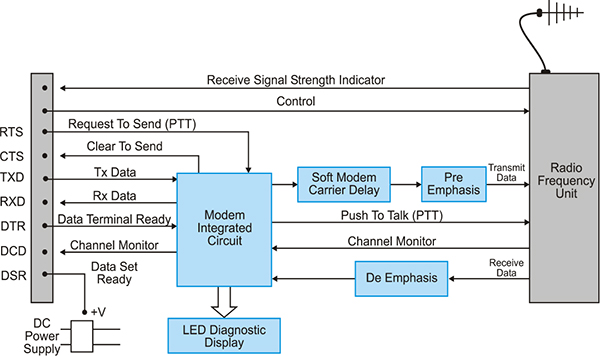

7.10 Radio modems 162

7.11 Error detection/correction 167

7.12 Data compression techniques 170

7.13 Modem standards 174

7.14 Troubleshooting a system using modems 176

7.15 Selection considerations 178

7.16 Multiplexing concepts 180

7.17 Terminal multiplexers 184

7.18 Statistical multiplexers 185

8 Introduction to protocols 186

8.1 Flow control protocols 187

8.2 XON/OFF 187

8.3 Binary synchronous protocol 187

8.4 HDLC and SDLC protocols 190

8.5 File transfer protocols 193

9 Open systems interconnection model 199

9.1 Data communications for instrumentation and control 199

9.2 Individual OSI layers 201

9.3 OSI analogy 202

9.4 An example of an industrial control application 203

9.5 Simplified OSI model 204

10 Industrial protocols 205

10.1 Introduction 205

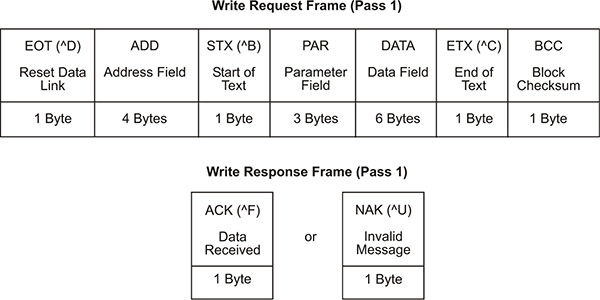

10.2 ASCII based protocols 206

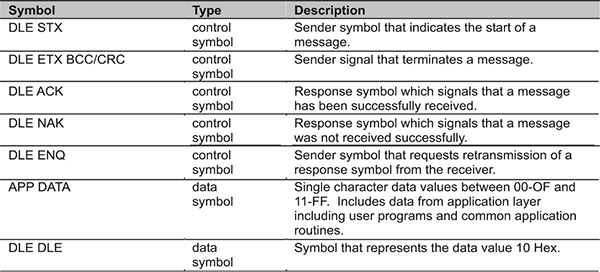

10.3 ASCII based protocol ANSI-X3.28-2.5-A4 210

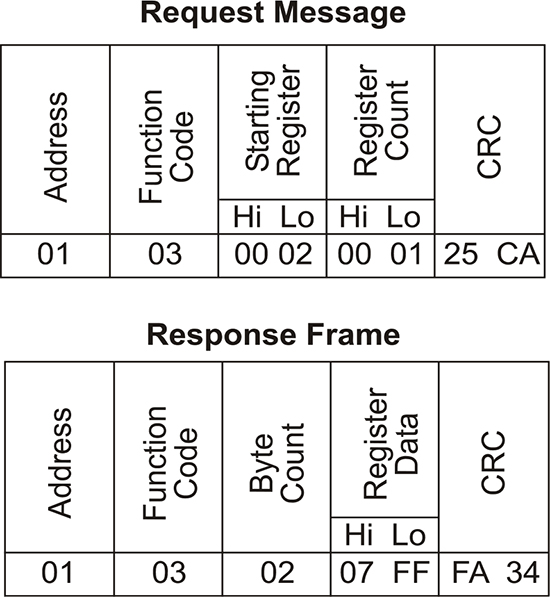

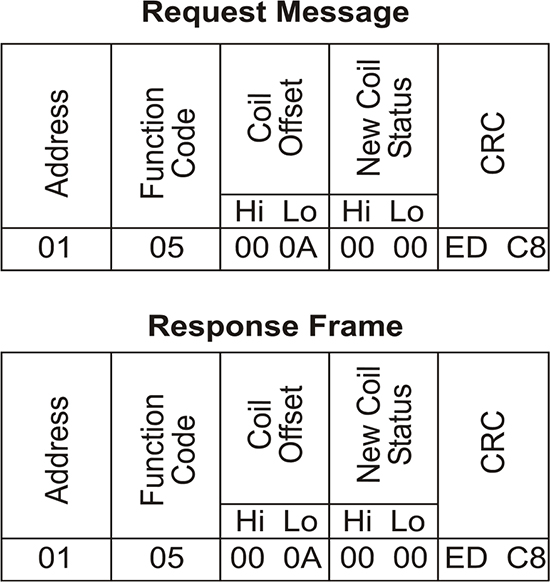

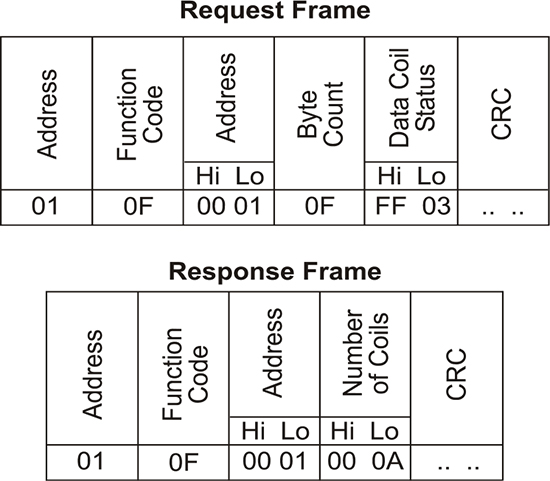

10.4 Modbus protocol 214

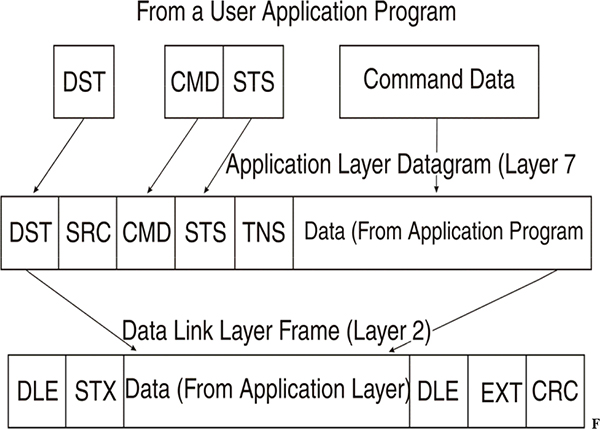

10.5 Allen Bradley Data Highway (Plus) protocol 229

11 HART protocol 239

11.1 Introduction to HART and smart instrumentation 239

11.2 Highway addressable remote transducer (HART) 240

11.3 Physical layer 241

11.4 Data link layer 243

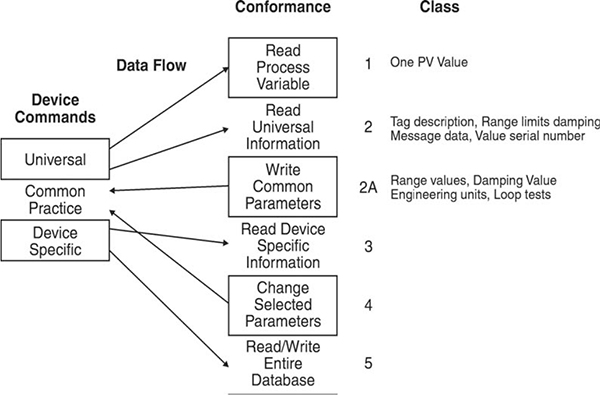

11.5 Application layer 244

11.6 Typical specification for a Rosemount transmitter 246

12 Open industrial Fieldbus and DeviceNet systems 248

12.1 Introduction 248

12.2 Overview 249

12.3 Actuator sensor interface (AS-i) 255

12.4 Seriplex 262

12.5 CANbus, DeviceNet and SDS systems 265

12.6 Interbus-S 271

12.7 Profibus 274

12.8 Factory information bus (FIP) 280

12.9 WorldFip 282

12.10 Foundation Fieldbus 283

13 Local area networks (LANs) 291

13.1 Overview 292

13.2 Circuit and packet switching 292

13.3 Network topologies 293

13.4 Media access control mechanisms 295

13.5 Transmission techniques 297

13.6 Summary of LAN standards 298

13.7 Ethernet 299

13.8 Medium access control 301

13.9 Ethernet protocol operation 302

13.10 Ethernet hardware requirements 305

13.11 Ethernet performance predictions 308

13.12 Reducing collisions 309

13.13 Fast Ethernet 310

13.14 Token ring 310

13.15 Token bus 313

13.16 Token bus protocol operations 314

13.17 Internetwork connections 317

13.18 Network operating systems 320

13.19 Network architectures and protocols 321

13.20 NOS products 323

Appendix A Numbering systems 325

Appendix B Cyclic redundancy check (CRC) program listing 331

Appendix C Serial link design 334

Appendix D Glossary 358

Appendix E 381

Index

Preface

The challenge for the engineer and technician today is to make effective use of modern instrumentation and control systems and ‘smart’ instruments. This is achieved by linking equipment such as PCs, programmable logic controllers (PLCs), SCADA and distributed control systems, and simple instruments together with data communications systems that are correctly designed and implemented; in other words, by fully utilizing the available technology.

Practical Data Communications for Instrumentation and Control is a comprehensive book covering industrial data communications including RS-232, RS-422, RS-485, industrial protocols, industrial networks, and communication requirements for ‘smart’ instrumentation.

Once you have studied this book, you will be able to analyze, specify, and debug data communications systems in the instrumentation and control environment, with much of the material presented being derived from many years of experience of the authors. It is especially suited to those who work in an industrial environment and who have little previous experience in data communications and networking.

Typical people who will find this book useful include:

- Instrumentation and control engineers and technicians

- Process control engineers and technicians

- Electrical engineers

- Consulting engineers

- Process development engineers

- Design engineers

- Control systems sales engineers

- Maintenance supervisors

We hope that you will gain the following from this book:

- The fundamentals of industrial data communications

- How to troubleshoot RS-232 and RS-485 links

- How to install communications cables

- The essentials of industrial Ethernet and local area networks

- How to troubleshoot industrial protocols such as Modbus

- The essentials of Fieldbus and DeviceNet standards

You should have a modicum of electrical knowledge and some exposure to industrial automation systems to derive maximum benefit from this book.

Why do we use RS-232, RS-422, RS-485?

One is often criticized for using these terms of reference, since in reality they are obsolete. However, if we briefly examine the history of the organization that defined these standards, it is not difficult to see why they are still in use today, and will probably continue as such.

The common serial interface RS-232 was defined by the Electronic Industries Association (EIA) of America. ‘RS’ stands for Recommended Standard, and the number (suffix -232) refers to the interface specification of the physical device. The EIA has since established many standards and amassed a library of white papers on various implementations of them. So to keep track of them all it made sense to change the prefix to EIA. (You might find it interesting to know that most of the white papers are NOT free.)

The Telecommunications Industry Association (TIA) was formed in 1988 by merging the telecom arms of the EIA and the United States Telecommunications Suppliers Association. The prefix changed again to EIA/TIA-232 (along with all the other serial implementations, of course). So now we have TIA-232, TIA-485 etc.

We should also point out that the TIA is a member of the Electronic Industries Alliance (EIA). The alliance is made up of several trade organizations (including the CEA, ECA, GEIA…) that represent the interests of manufacturers of electronics-related products. When someone refers to ‘EIA’ they are talking about the Alliance, not the Association!

If we still use the terms EIA-232, EIA-422 etc, then they are just as obsolete as the ‘RS’ equivalents. However, when they are referred to as TIA standards some people might give you a quizzical look and ask you to explain yourself. So to cut a long story short, one says ‘RS-xxx’ and the penny drops.

In the book you are about to read, the authors have painstakingly altered all references for serial interfaces to ‘RS-xxx’, after being told to change them BACK from ‘EIA-xxx’! So from now on, we will continue to use the former terminology. This is a sensible idea, and we trust we are all in agreement!

Why do we use DB-25, DB-9, DB-xx?

Originally developed by Cannon for military use, the D-sub (D-subminiature) connectors are so called because the mating face of the housing is shaped like a ‘D’. The connectors have 9-, 15-, 25-, 37- and 50-pin configurations, designated DE-9, DA-15, DB-25, DC-37 and DD-50, respectively. Probably the most common connector in the early days was the 25-pin configuration (which has been around for about 40 years), because it permitted use of all available wiring options for the RS-232 interface.

It was expected that RS-232 might be used for synchronous data communications, requiring a timing signal, and thus the extra pin-outs. However this is rarely used in practice, so the smaller 9-position connectors have taken its place as the dominant configuration (for asynchronous serial communications).

Also available in the standard D-sub configurations is a series of high density options with 15-, 26-, 44-, and 62-pin positions. (There may be more, usually variations on the original A, B, C, D, or E connector sizes.) It is common practice for electronics manufacturers to denote all D-sub connectors with the DB- prefix, particularly among producers of components, board-level products and cables. This has spawned generations of electronics enthusiasts and corporations alike, who refer to the humble D-sub or ‘D Connector’ in this fashion. It is for this reason alone that we continue the trend for the benefit of the majority who are so familiar with the ‘DB’ terminology.

The structure of the book is as follows.

Chapter 1: Overview. This chapter gives a brief overview of what is covered in the book with an outline of the essentials and a historical background to industrial data communications.

Chapter 2: Basic principles. The aim of this chapter is to lay the groundwork for the more detailed information presented in the following chapters.

Chapter 3: Serial communication standards. This chapter discusses the main physical interface standards associated with data communications for instrumentation and control systems.

Chapter 4: Error detection. This chapter looks at how errors are produced and the types of error detection, control, and correction available.

Chapter 5: Cabling basics. This chapter discusses the issues in obtaining the best performance from a communication cable by selecting the correct type and size.

Chapter 6: Electrical noise and interference. This chapter examines the various categories of electrical noise and where each of the various noise reduction techniques applies.

Chapter 7: Modems and multiplexers. This chapter reviews the concepts of modems and multiplexers, their practical use, position and importance in the operation of a data communication system.

Chapter 8: Introduction to protocols. This chapter discusses the concept of a protocol which is defined as a set of rules governing the exchange of data between a transmitter and receiver over a communications link or network.

Chapter 9: Open systems interconnection model. The purpose of the Open Systems Interconnection reference model is to provide a common basis for the development of systems interconnection standards. An open system is a system that conforms to specifications and guidelines, which are ‘open’ to all.

Chapter 10: Industrial protocols. This chapter focuses on the software aspects of protocols (as opposed to the physical aspects which are covered in earlier chapters).

Chapter 11: HART protocol. The Highway Addressable Remote Transducer (HART) protocol is one of a number of smart instrumentation protocols designed for collecting data from instruments, sensors and actuators by digital communication techniques. This chapter examines this in some depth.

Chapter 12: Open industrial Fieldbus and DeviceNet systems. This chapter examines the different Fieldbus and DeviceNet systems on the market with an emphasis on AS-i, CANbus and DeviceNet, Interbus-S, Profibus and Foundation Fieldbus.

Chapter 13: Local area networks (LANs). This chapter focuses on networks generally used in industrial data communications with an emphasis on Ethernet.

1 Overview

This chapter introduces data communications, and provides a historical background. It discusses the need for standards in the data communications industry in terms of the physical transfer of information and the way in which data is handled. Finally, it takes a brief look at data communications as they apply to instrumentation and control systems.

Objectives

When you have completed studying this chapter you will be able to:

- Describe the basic principles of all communication systems

- Describe the historical background and evolution of data communications

- Explain the role of standards and protocols

- Describe the OSI model of communication layers

- Describe four important physical standards

- Explain the purpose of instrumentation and control systems

- Describe the four most important control devices:

  - DCS

  - PLCs

  - Smart instruments

  - PCs

1.1 Introduction

Data communications is the transfer of information from one point to another. In this book, we are specifically concerned with digital data communication. In this context, ‘data’ refers to information that is represented by a sequence of zeros and ones; the same sort of data that is handled by computers. Many communications systems handle analog data; examples are the telephone system, radio, and television. Modern instrumentation is almost wholly concerned with the transfer of digital data.

Any communications system requires a transmitter to send information, a receiver to accept it and a link between the two. Types of link include copper wire, optical fiber, radio, and microwave.

Some short distance links use parallel connections, meaning that several wires are used to carry the bits of a data word at the same time. This sort of connection is confined to devices such as local printers. Virtually all modern data communications use serial links, in which the data is transmitted in sequence over a single circuit.
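As a rough illustration of what "transmitted in sequence over a single circuit" means, the sketch below splits one byte into the bit sequence a serial link would carry and rebuilds it at the receiving end. Sending the least significant bit first is an assumption made for this example (the text above does not specify a bit order), and the function names are hypothetical.

```python
def serialize_byte(value):
    """Return the 8 bits of value in transmission order (assumed LSB first)."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in one byte")
    return [(value >> i) & 1 for i in range(8)]


def deserialize_bits(bits):
    """Rebuild a byte from 8 received bits (assumed LSB first)."""
    if len(bits) != 8:
        raise ValueError("expected exactly 8 bits")
    value = 0
    for i, bit in enumerate(bits):
        value |= (bit & 1) << i
    return value


bits = serialize_byte(ord("A"))      # 'A' is 0x41 = 0b01000001
print(bits)                          # [1, 0, 0, 0, 0, 0, 1, 0]
print(chr(deserialize_bits(bits)))   # A
```

A parallel link would present all eight of these bits at once on eight separate wires; the serial link sends them one after another on a single circuit.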

The digital data is sometimes transferred using a system that is primarily designed for analog communication. A modem, for example, works by using a digital data stream to modulate an analog signal that is sent over a telephone line. At the receiving end, another modem demodulates the signal to reproduce the original digital data. The word ‘modem’ comes from modulator and demodulator.

There must be mutual agreement on how data is to be encoded; that is, the receiver must be able to understand what the transmitter is sending. The set of rules by which devices communicate is known as a protocol.

In the past decade many standards and protocols have been established which allow data communications technology to be used more effectively in industry. Designers and users are beginning to realize the tremendous economic and productivity gains possible with the integration of discrete systems that are already in operation.

1.2 Historical background

Although there were many early systems (such as the French chain of semaphore stations), data communications in its modern electronic form started with the invention of the telegraph. The first systems used several parallel wires, but it soon became obvious that for long distances a serial method, over a single pair of wires, was the most economical.

The first practical telegraph system is generally attributed to Samuel Morse. At each end of a link, there was an operator with a sending key and sounder. A message was sent as an encoded series of ‘dots’ (short pulses) and ‘dashes’ (longer pulses). This became known as the Morse code and comprised about 40 characters including the complete alphabet, numbers, and some punctuation. In operation, a sender would first transmit a starting sequence, which would be acknowledged by a receiver. The sender would then transmit the message and wait for a final acknowledgment. Signals could only be transmitted in one direction at a time.

Manual encoding and decoding limited transmission speeds and attempts were soon made to automate the process. The first development was ‘teleprinting’ in which the dots and dashes were recorded directly onto a rotating drum and could be decoded later by the operator.

The next stage was a machine that could decode the signal and print the actual characters by means of a wheel carrying the typefaces. Although this system persisted for many years, it suffered from synchronization problems.

Perhaps the most severe limitation of Morse code is its use of a variable number of elements to represent the different characters. A character can take anything from a single dot or dash up to six dots and/or dashes, which made the code unsuitable for an automated system. An alternative ‘code’ was invented in the late 1800s by the French telegraphic engineer Maurice Emile Baudot. The Baudot code was the first uniform-length binary code: each character was represented by a standard 5-bit character size. It encoded 32 (2^5) characters, which included all the letters of the alphabet, but no numerals.
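The contrast between a variable-length and a uniform-length code can be sketched as follows. The Morse entries are a small genuine subset; the 5-bit table is a hypothetical assignment (letters numbered in alphabetical order) chosen only for illustration, and is not the real Baudot code.

```python
import string

# A few genuine Morse codes: note the differing lengths.
MORSE = {"E": ".", "T": "-", "A": ".-", "N": "-.", "S": "...", "O": "---"}

# Hypothetical 5-bit code: A=00000, B=00001, ... (NOT the real Baudot table).
BITS_PER_CHAR = 5
FIXED = {ch: format(i, "05b") for i, ch in enumerate(string.ascii_uppercase)}
REVERSE = {code: ch for ch, code in FIXED.items()}


def encode_fixed(text):
    """Concatenate the 5-bit codes with no separators between characters."""
    return "".join(FIXED[ch] for ch in text)


def decode_fixed(bits):
    """Split the stream every 5 bits; no gaps are needed to find boundaries."""
    if len(bits) % BITS_PER_CHAR:
        raise ValueError("stream length must be a multiple of 5")
    return "".join(REVERSE[bits[i:i + BITS_PER_CHAR]]
                   for i in range(0, len(bits), BITS_PER_CHAR))


print(2 ** BITS_PER_CHAR)                      # 32 possible characters
# Without gaps, Morse "E" followed by "T" looks exactly like "A":
print(MORSE["E"] + MORSE["T"] == MORSE["A"])   # True
stream = encode_fixed("NOTE")
print(stream)                                  # 01101011101001100100
print(decode_fixed(stream))                    # NOTE
```

Because every character occupies the same number of bits, a machine can find character boundaries by counting alone, which is what a variable-length code cannot offer.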

The International Telecommunication Union (ITU) later adopted the code as the standard for telegraph communications and incorporated a ‘shift’ function to accommodate a further set of 32 characters. The term ‘baud’ was coined in Baudot’s honor and used to indicate the rate at which a signal changes state. For example, 100 baud means 100 possible signal changes per second.
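A minimal sketch of how a ‘shift’ function lets a 5-bit code reach a second character set is given below. The code values, the two tables and the choice of 31 and 27 as shift codes are assumptions made for this example and should not be read as the ITU code table. The last function simply turns a baud figure into the duration of one signal element.

```python
# Hypothetical 5-bit code values (0..31); not the ITU table.
LETTERS_SHIFT = 31   # assumed code meaning "following codes are letters"
FIGURES_SHIFT = 27   # assumed code meaning "following codes are figures"

LETTERS = {i: ch for i, ch in enumerate("ABCDEFGHIJKLMNOPQRSTUVWXYZ")}
FIGURES = {i: ch for i, ch in enumerate("0123456789")}
LETTER_CODES = {ch: i for i, ch in LETTERS.items()}
FIGURE_CODES = {ch: i for i, ch in FIGURES.items()}


def encode(text):
    """Encode text, inserting a shift code whenever the character set changes."""
    codes, in_figures = [], False
    for ch in text:
        if ch in LETTER_CODES:
            if in_figures:
                codes.append(LETTERS_SHIFT)
                in_figures = False
            codes.append(LETTER_CODES[ch])
        elif ch in FIGURE_CODES:
            if not in_figures:
                codes.append(FIGURES_SHIFT)
                in_figures = True
            codes.append(FIGURE_CODES[ch])
        else:
            raise ValueError(f"no code for {ch!r}")
    return codes


def decode(codes):
    """Decode by tracking which table the most recent shift code selected."""
    table, out = LETTERS, []
    for code in codes:
        if code == LETTERS_SHIFT:
            table = LETTERS
        elif code == FIGURES_SHIFT:
            table = FIGURES
        else:
            out.append(table.get(code, "?"))
    return "".join(out)


def element_duration_ms(baud):
    """Duration of one signal element in milliseconds."""
    return 1000 / baud


print(encode("RS232"))                 # [17, 18, 27, 2, 3, 2]
print(decode([17, 18, 27, 2, 3, 2]))   # RS232
print(element_duration_ms(100))        # 10.0
```

The same 5-bit value (for example 2) decodes as ‘C’ or ‘2’ depending on the last shift code seen, which is how the usable character set is roughly doubled without lengthening each character.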

The telegraph system used electromechanical devices at each end of a link to encode and decode a message. Later machines allowed a user to encode a message off-line onto punched paper tape, and then transmit the message automatically via a tape reader. At the receiving end, an electric typewriter mechanism printed the text. Facsimile transmission using computer technology, more sophisticated encoding and communications systems, has almost replaced telegraph transmissions.

The steady evolution of data communications has led to the modern era of very high speed systems, built on the sound theoretical and practical foundations established by the early pioneers.

1.3 Standards

Protocols are the structures used within a communications system so that, for example, a computer can talk to a printer. Traditionally, developers of software and hardware platforms have developed protocols that only their own products can use. In order to develop more integrated instrumentation and control systems, standardization of these communication protocols is required.

Standards may evolve from the wide use of one manufacturer’s protocol (a de facto standard) or may be specifically developed by bodies that represent an industry. Standards allow manufacturers to develop products that will communicate with equipment already in use, which for the customer simplifies the integration of products from different sources.

1.4 Open systems interconnection (OSI) model

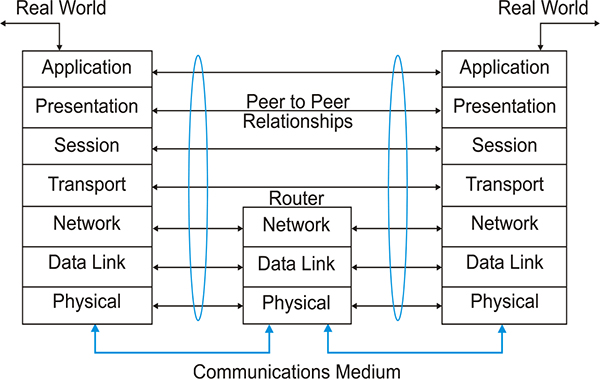

The OSI model, developed by the International Organization for Standardization (ISO), is rapidly gaining industry support. The OSI model reduces every design and communication problem into a number of layers as shown in Figure 1.1. A physical interface standard such as RS-232 would fit into the ‘physical layer’, while the other layers relate to various other protocols.

Representation of the OSI model

Messages or data are generally sent in packets, which are simply a sequence of bytes. The protocol defines the length of the packet, which is usually fixed. Each packet requires a source address and a destination address so that the system knows where to send it, and the receiver knows where it came from. A packet starts at the top of the protocol stack, the application layer, and passes down through the other software layers until it reaches the physical layer. It is then sent over the link. When traveling down the stack, the packet acquires additional header information at each layer. This tells the next layer down what to do with the packet. At the receiver end, the packet travels up the stack with each piece of header information being stripped off on the way. The application layer only receives the data sent by the application layer at the transmitter.

The arrows between layers in Figure 1.1 indicate that each layer reads the packet as coming from, or going to, the corresponding layer at the opposite end. This is known as peer-to-peer communication, although the actual packet is transported via the physical link. The middle stack in this particular case (representing a router) has only the three lower layers, which is all that is required for the correct transmission of a packet between two devices.
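The way a packet gains a header at each layer on the way down and loses it on the way up can be sketched in a few lines of Python. This is only an illustration: the bracketed text headers and the function names `send_down` and `send_up` are hypothetical, and real protocols use binary headers (and sometimes trailers) defined by each layer's own standard.

```python
# Layers that add a header in this sketch, listed from the top of the stack down.
# The physical layer is left out because it only transmits the resulting bits.
LAYERS = ["application", "presentation", "session",
          "transport", "network", "data link"]


def send_down(data: bytes) -> bytes:
    """Pass data down the stack; each layer puts its own header in front."""
    packet = data
    for layer in LAYERS:
        header = f"[{layer}]".encode("ascii")  # hypothetical header format
        packet = header + packet
    return packet


def send_up(packet: bytes) -> bytes:
    """Pass a received packet up the stack; each layer strips its own header."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]".encode("ascii")
        if not packet.startswith(header):
            raise ValueError(f"missing {layer} header")
        packet = packet[len(header):]
    return packet


on_the_wire = send_down(b"hello")
received = send_up(on_the_wire)
print(on_the_wire)
print(received)
```

Each layer in `send_up` only looks at the header written by its peer layer in `send_down`, which is the peer-to-peer relationship described above.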

The OSI model is useful in providing a universal framework for all communication systems. However, it does not define the actual protocol to be used at each layer. It is anticipated that groups of manufacturers in different areas of industry will collaborate to define software and hardware standards appropriate to their particular industry. Those seeking an overall framework for their specific communications requirements have enthusiastically embraced the OSI model and used it as a basis for their industry specific standards, such as Fieldbus and HART.

Full market acceptance of these standards has been slow due to uncertainty about widespread acceptance of a particular standard, additional upfront cost to implement the standard, and concern about adequate support and training to maintain the systems.

1.5 Protocols

As previously mentioned, the OSI model provides a framework within which a specific protocol may be defined. A frame (packet) might consist of the following. The first byte can be a string of 1s and 0s to synchronize the receiver or flags to indicate the start of the frame (for use by the receiver). The second byte could contain the destination address detailing where the message is going. The third byte could contain the source address noting where the message originated. The bytes in the middle of the message could be the actual data that has to be sent from transmitter to receiver. The final byte(s) are end-of-frame indicators, which can be error detection codes and/or ending flags.

Basic structure of an information frame defined by a protocol
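As a rough sketch of such a frame, the Python below builds and checks a frame with a start flag, a destination address, a source address, the data and a final check byte. The flag value `0x7E`, the one-byte addresses and the simple sum-of-bytes check are assumptions chosen for illustration, not part of any particular protocol.

```python
START_FLAG = 0x7E  # hypothetical start-of-frame flag


def checksum(body: bytes) -> int:
    """Simple illustrative check byte: sum of all body bytes, modulo 256."""
    return sum(body) % 256


def build_frame(dest: int, src: int, data: bytes) -> bytes:
    """Assemble flag + destination + source + data + check byte."""
    body = bytes([dest, src]) + data
    return bytes([START_FLAG]) + body + bytes([checksum(body)])


def parse_frame(frame: bytes) -> tuple:
    """Return (destination, source, data) or raise ValueError on a bad frame."""
    if len(frame) < 4 or frame[0] != START_FLAG:
        raise ValueError("bad start of frame")
    body, received_check = frame[1:-1], frame[-1]
    if checksum(body) != received_check:
        raise ValueError("checksum mismatch")
    return body[0], body[1], body[2:]


frame = build_frame(0x02, 0x01, b"OK")
print(frame.hex(" "))
print(parse_frame(frame))
```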

Protocols vary from the very simple (such as ASCII based protocols) to the very sophisticated, which operate at high speeds transferring megabits of data per second. There is no right or wrong protocol; the choice depends on the particular application.

1.6 Physical standards

RS-232 interface standard

The RS-232C interface standard was issued in the USA in 1969 to define the electrical and mechanical details of the interface between data terminal equipment (DTE) and data communications equipment (DCE) which employ serial binary data interchange.

In serial data communications, the communications system might consist of:

- The DTE, a data sending terminal such as a computer, which is the source of the data (usually a series of characters coded into a suitable digital form)

- The DCE, which acts as a data converter (such as a modem) to convert the signal into a form suitable for the communications link e.g. analog signals for the telephone system

- The communications link itself, for example, a telephone system

- A suitable receiver, such as a modem, also a DCE, which converts the analog signal back to a form suitable for the receiving terminal

- A data receiving terminal, such as a printer, also a DTE, which receives the digital pulses for decoding back into a series of characters

Figure 1.3 illustrates the signal flows across a simple serial data communications link.

A typical serial data communications link

The RS-232C interface standard describes the interface between a terminal (DTE) and a modem (DCE) specifically for the transfer of serial binary digits. It leaves a lot of flexibility to the designers of the hardware and software protocols. With the passage of time, this interface standard has been adapted for use with numerous other types of equipment such as personal computers (PCs), printers, programmable controllers, programmable logic controllers (PLCs), instruments and so on. To recognize these additional applications, the latest version of the standard, RS-232E, has expanded the meaning of the acronym DCE from ‘data communications equipment’ to the more general ‘data circuit-terminating equipment’.

RS-232 has a number of inherent weaknesses that make it unsuitable for data communications for instrumentation and control in an industrial environment. Consequently, other RS interface standards have been developed to overcome some of these limitations. The most commonly used among them for instrumentation and control systems are RS-423, RS-422 and RS-485. These will be described in more detail in Chapter 3.

RS-423 interface standard

The RS-423 interface standard is an unbalanced system similar to RS-232 with increased range and data transfer rates and up to 10 line receivers per line driver.

RS-422 interface standard

The RS-422 interface system is a balanced system with the same range as RS-423, with increased data rates and up to 10 line receivers per line driver.

RS-485 interface standard

The RS-485 is a balanced system with the same range as RS-422, but with increased data rates and up to 32 transmitters and receivers possible per line.

The RS-485 interface standard is very useful for instrumentation and control systems where several instruments or controllers may be connected together on the same multi-point network.

1.7 Modern instrumentation and control systems

In an instrumentation and control system, data is acquired by measuring instruments and is transmitted to a controller – typically a computer. The controller then transmits data (or control signals) to control devices, which act upon a given process.

Integration of a system enables data to be transferred quickly and effectively between different systems in a plant along a data communications link. This eliminates the need for expensive and unwieldy wiring looms and termination points.

Productivity and quality are the principal objectives in the efficient management of any production activity. Management can be substantially improved by the availability of accurate and timely data. From this we can surmise that a good instrumentation and control system can facilitate both quality and productivity.

The main purpose of an instrumentation and control system, in an industrial environment, is to provide the following:

- Control of the processes and alarms

Traditionally, control of processes, such as temperature and flow, was provided by analog controllers operating on standard 4–20 mA loops. The 4–20 mA standard is utilized by equipment from a wide variety of suppliers. It is common for equipment from various sources to be mixed in the same control system. Stand-alone controllers and instruments have largely been replaced by integrated systems such as distributed control systems (DCS), described below.

- Control of sequencing, interlocking and alarms

Typically, this was provided by relays, timers and other components hardwired into control panels and motor control centers. The sequence control, interlocking and alarm requirements have largely been replaced by PLCs, described in section 1.9.

- An operator interface for display and control

Traditionally, process and manufacturing plants were operated from local control panels by several operators, each responsible for a portion of the overall process. Modern control systems tend to use a central control room to monitor the entire plant. The control room is equipped with computer based operator workstations which gather data from the field instrumentation and use it for graphical display, to control processes, to monitor alarms, to control sequencing and for interlocking.

- Management information

Management information was traditionally provided by taking readings from meters, chart recorders, counters, and transducers and from samples taken from the production process. This data is required to monitor the overall performance of a plant or process and to provide the data necessary to manage the process. Data acquisition is now integrated into the overall control system. This eliminates the gathering of information and reduces the time required to correlate and use the information to remove bottlenecks. Good management can achieve substantial productivity gains.

The ability of control equipment to fulfill these requirements has depended on the major advances that have taken place in the fields of integrated electronics, microprocessors and data communications.

The four devices that have made the most significant impact on how plants are controlled are:

- Distributed control systems (DCSs)

- Programmable logic controllers (PLCs)

- Smart instruments (SIs)

- PCs

1.8 Distributed control systems (DCSs)

A DCS is a hardware- and software-based digital process control and data acquisition system. The DCS is based on a data highway and has a modular, distributed, but integrated architecture. Each module performs a specific dedicated task such as the operator interface, analog or loop control, or digital control. There is normally an interface unit situated on the data highway allowing easy connection to other devices such as PLCs and supervisory computer devices.

1.9 Programmable logic controllers (PLCs)

PLCs were developed in the late sixties to replace collections of electromagnetic relays, particularly in the automobile manufacturing industry. They were primarily used for sequence control and interlocking with racks of on/off inputs and outputs, called digital I/O. They are controlled by a central processor using easily written ‘ladder logic’ type programs. Modern PLCs now include analog and digital I/O modules as well as sophisticated programming capabilities similar to a DCS, e.g. PID loop programming. High speed inter-PLC links are also available, such as 10 and 100 Mbps Ethernet. A diagram of a typical PLC system is given in Figure 1.4.

A typical PLC system

1.10 Impact of the microprocessor

The microprocessor has had an enormous impact on instrumentation and control systems. Historically, an instrument had a single dedicated function. Controllers were localized and, although commonly computerized, they were designed for a specific purpose.

It has become apparent that a microprocessor, as a general-purpose device, can replace localized and highly site-specific controllers. Centralized microprocessors, which can analyze and display data as well as calculate and transmit control signals, are capable of greater efficiency, productivity, and quality gains.

Currently, a microprocessor connected directly to sensors and a controller requires an interface card. This implements the hardware layer of the protocol stack and, in conjunction with appropriate software, allows the microprocessor to communicate with other devices in the system. There are many instrumentation and control software and hardware packages; some are designed for particular proprietary systems and others are more general-purpose. Interface hardware and software now available for microprocessors cover virtually all the communications requirements for instrumentation and control.

As a microprocessor is relatively cheap, it can be upgraded as newer and faster models become available, thus improving the performance of the instrumentation and control system.

1.11 Smart instrumentation systems

In the 1960s, the 4–20 mA analog interface was established as the de facto standard for instrumentation technology. As a result, the manufacturers of instrumentation equipment had a standard communication interface on which to base their products. Users had a choice of instruments and sensors, from a wide range of suppliers, which could be integrated into their control systems.

With the advent of microprocessors and the development of digital technology, the situation has changed. Most users appreciate the many advantages of digital instruments. These include more information being displayed on a single instrument, local and remote display, reliability, economy, self tuning, and diagnostic capability. There is a gradual shift from analog to digital technology.

There are a number of intelligent digital sensors, with digital communications capability, for most traditional applications. These include sensors for measuring temperature, pressure, levels, flow, mass (weight), density, and power system parameters. These new intelligent digital sensors are known as ‘smart’ instrumentation.

The main features that define a ‘smart’ instrument are:

- Intelligent, digital sensors

- Digital data communications capability

- Ability to be multidropped with other devices

There is also an emerging range of intelligent, communicating, digital devices that could be called ‘smart’ actuators. Examples of these are devices such as variable speed drives, soft starters, protection relays, and switchgear control with digital communication facilities.

Graphical representation of data communications

The aim of this chapter is to lay the groundwork for the more detailed information presented in the following chapters.

Objectives

When you have completed study of this chapter you will be able to:

- Explain the basics of the binary numbering system – bits, bytes and characters

- Describe the factors that affect transmission speed:

  - Bandwidth

  - Signal-to-noise ratio

  - Data throughput

  - Error rate

- Explain the basic components of a communication system

- Describe the three communication modes

- Describe the message format and error detection in asynchronous communication systems

- List and explain the most common data codes:

  - Baudot

  - ASCII

  - EBCDIC

  - 4-bit binary code

  - Gray code

  - Binary coded decimal (BCD)

- Describe the message format and error detection in synchronous communication systems

- Describe the universal asynchronous transmitter/receiver

2.1 Bits, bytes and characters

A computer uses the binary numbering system, which has only two digits, 0 and 1. Any number can be represented by a string of these digits, known as bits (from binary digit). For example, the decimal number 5 is equal to the binary number 101.

Different sets of bits

As a bit can have only two values, it can be represented by a voltage that is either on (1) or off (0). This is also known as logical 1 and logical 0. Typical values used in a computer are 0 V for logical 0 and +5 V for logical 1, although it could also be the other way around i.e. 0 V for 1 and +5 V for 0.

A string of eight bits is called a ‘byte’ (or octet), and can have values ranging from 0 (0000 0000) to 255 decimal (1111 1111 binary). Computers generally manipulate data in bytes or multiples of bytes.

The hexadecimal table

Programmers use ‘hexadecimal’ notation because it is a more convenient way of defining and dealing with bytes. In the hexadecimal numbering system, there are 16 digits (0–9 and A–F) each of which is represented by four bits. A byte is therefore represented by two hexadecimal digits.
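The relationship between decimal, binary and hexadecimal notation can be checked with a short Python sketch. The helper name `describe_byte` is hypothetical; it uses only Python's built-in number formatting.

```python
def describe_byte(value: int) -> str:
    """Show one byte in decimal, binary (two groups of four bits) and hex."""
    if not 0 <= value <= 255:
        raise ValueError("a byte holds values from 0 to 255")
    bits = format(value, "08b")  # eight binary digits, zero padded
    return f"{value} = {bits[:4]} {bits[4:]} binary = {value:02X} hex"


print(describe_byte(5))
print(describe_byte(255))
```

Each group of four bits in the output corresponds to exactly one hexadecimal digit, which is why a byte always needs two hexadecimal digits.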

A ‘character’ is a symbol that can be printed. The alphabet, both upper and lower case, numerals, punctuation marks and symbols such as ‘*’ and ‘&’ are all characters. A computer needs to express these characters in such a way that they can be understood by other computers and devices. The most common code for achieving this is the American Standard Code for Information Interchange (ASCII) described in section 2.8.

2.2 Communication principles

Every data communications system requires:

- A source of data (a transmitter or line driver), which converts the information into a form suitable for transmission over a link

- A receiver that accepts the signal and converts it back into the original data

- A communications link that transports the signals. This can be copper wire, optical fiber, or a radio or satellite link

In addition, the transmitter and receiver must be able to understand each other. This requires agreement on a number of factors. The most important are:

- The type of signaling used

- Defining a logical ‘1’ and a logical ‘0’

- The codes that represent the symbols

- Maintaining synchronization between transmitter and receiver

- How the flow of data is controlled, so that the receiver is not swamped

- How to detect and correct transmission errors

The physical factors are referred to as the ‘interface standard’; the other factors comprise the ‘protocols’.

The physical method of transferring data across a communication link varies according to the medium used. The binary values 0 and 1, for example, can be signaled by the presence or absence of a voltage on a copper wire, by a pair of audio tones generated and decoded by a modem in the case of the telephone system, or by the use of modulated light in the case of optical fiber.

2.3 Communication modes

In any communications link connecting two devices, data can be sent in one of three communication modes. These are:

- Simplex

- Half duplex

- Full duplex

A simplex system is one that is designed for sending messages in one direction only. This is illustrated in Figure 2.1.

Simplex communications

A duplex system is designed for sending messages in both directions.

Half duplex occurs when data can flow in both directions, but in only one direction at a time (Figure 2.2).

Half-duplex communications

In a full-duplex system, the data can flow in both directions simultaneously (Figure 2.3).

Full duplex communications

2.4 Asynchronous systems

An asynchronous system is one in which each character or byte is sent within a frame. The receiver does not start detection until it receives the first bit, known as the ‘start bit’. The start bit is in the opposite voltage state to the idle voltage and allows the receiver to synchronize to the transmitter for the following data in the frame.

The receiver reads in the individual bits of the frame as they arrive, seeing either the logic 0 voltage or the logic 1 voltage at the appropriate time. The ‘clock’ rate at each end must be the same so that the receiver looks for each bit at the time the transmitter sends it. However, as the clocks are synchronized at the start of each frame, some variation can be tolerated at lower transmission speeds. The allowable variation decreases as data transmission rates increase, and asynchronous communication can have problems at high speeds (above 100 kbps).

Message format

An asynchronous frame may have the following format:

| Field | Description |
|---|---|
| Start bit | Signals the start of the frame |
| Data | Usually 7 or 8 bits of data, but can be 5 or 6 bits |
| Parity bit | Optional error detection bit |
| Stop bit(s) | Usually 1, 1.5 or 2 bits. A value of 1.5 means that the level is held for 1.5 times as long as for a single bit |

Asynchronous frame format

An asynchronous frame format is shown in Figure 2.4. The transmitter and receiver must be set to exactly the same configuration so that the data can be correctly extracted from the frame. As each character has its own frame, the actual data transmission speed is less than the bit rate. For example, with a start bit, seven data bits, one parity bit and one stop bit, there are ten bits needed to send seven bits of data. Thus the transmission of useful data is 70% of the overall bit rate.

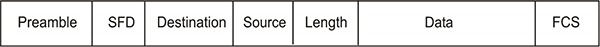
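The frame layout and the 70% figure can be reproduced with a small Python sketch. It assumes the usual UART conventions, which are not stated in the text above: the line idles at logic 1, the start bit is logic 0, and the data bits are sent least significant bit first. The function name `build_async_frame` is hypothetical, and only whole numbers of stop bits are modelled.

```python
def build_async_frame(char_code: int, data_bits: int = 7,
                      parity: str = "even", stop_bits: int = 1) -> list:
    """Return the logic levels of one frame in the order they are sent."""
    if not 0 <= char_code < 2 ** data_bits:
        raise ValueError("character does not fit in the data bits")
    # Assumption: least significant data bit is transmitted first.
    data = [(char_code >> i) & 1 for i in range(data_bits)]
    frame = [0]                      # start bit, opposite to the idle level
    frame += data
    if parity == "even":
        frame.append(sum(data) % 2)
    elif parity == "odd":
        frame.append((sum(data) + 1) % 2)
    elif parity != "none":
        raise ValueError("parity must be 'even', 'odd' or 'none'")
    frame += [1] * stop_bits         # stop bit(s) return the line to idle
    return frame


frame = build_async_frame(ord("D"))  # 'D' is 1000100 in 7-bit ASCII
efficiency = 7 / len(frame)
print(frame, f"{efficiency:.0%}")
```

With one start bit, seven data bits, one parity bit and one stop bit the frame is ten bits long, so only seven tenths of the transmitted bits carry data.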

2.5 Synchronous systems

In synchronous systems, the receiver initially synchronizes to the transmitter’s clock pulses, which are incorporated in the transmitted data stream. This enables the receiver to maintain its synchronization throughout large messages, which could typically be up to 4500 bytes (36 000 bits). This allows large frames to be transmitted efficiently at high data rates. The synchronous system packs many characters together and sends them as a continuous stream, called a packet or a frame.

Message format

A typical synchronous system frame format is shown below in Figure 2.5.

Typical synchronous system frame format

| Field | Description |
|---|---|
| Preamble | This comprises one or more bytes that allow the receiving unit to synchronize with the frame. |
| SFD | The start of frame delimiter signals the beginning of the frame. |
| Destination | The address to which the frame is sent. |
| Source | The address from which the frame originated. |
| Length | The number of bytes in the data field. |
| Data | The actual message. |
| FCS | The frame check sequence is for error detection. |

Each of these is called a field.
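A frame with these fields can be sketched in Python as follows. The field sizes and values are assumptions made for the example: a seven-byte preamble of `0x55`, a one-byte SFD of `0xD5`, one-byte addresses, a two-byte length and a CRC-32 (from Python's `zlib` module) as the FCS. Real protocols define their own sizes and check algorithms.

```python
import struct
import zlib

PREAMBLE = bytes([0x55] * 7)  # hypothetical preamble pattern
SFD = bytes([0xD5])           # hypothetical start of frame delimiter


def build_sync_frame(dest: int, src: int, data: bytes) -> bytes:
    """Assemble preamble, SFD, destination, source, length, data and FCS."""
    header = struct.pack(">BBH", dest, src, len(data))
    fcs = struct.pack(">I", zlib.crc32(header + data))
    return PREAMBLE + SFD + header + data + fcs


def parse_sync_frame(frame: bytes) -> tuple:
    """Return (destination, source, data) or raise ValueError on a bad frame."""
    start = len(PREAMBLE) + len(SFD)
    if frame[:start] != PREAMBLE + SFD:
        raise ValueError("preamble or SFD not found")
    dest, src, length = struct.unpack(">BBH", frame[start:start + 4])
    data_end = start + 4 + length
    data = frame[start + 4:data_end]
    (fcs,) = struct.unpack(">I", frame[data_end:data_end + 4])
    if zlib.crc32(frame[start:data_end]) != fcs:
        raise ValueError("FCS mismatch")
    return dest, src, data


frame = build_sync_frame(2, 1, b"hello")
print(len(frame), parse_sync_frame(frame))
```

In this sketch the five data bytes travel with sixteen bytes of overhead; the overhead stays fixed as the data field grows, which is why large synchronous frames are efficient.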

2.6 Error detection

All practical data communications channels are subject to noise, particularly copper cables in industrial environments with high electrical noise. Refer to Chapter 6 for a separate discussion on noise. Noise can result in incorrect reception of the data.

The basic principle of error detection is for the transmitter to compute a check character based on the original message content. This is sent to the receiver on the end of the message and the receiver repeats the same calculation on the bits it receives. If the computed check character does not match the one sent, we assume an error has occurred. The various methods of error detection are covered in Chapter 4.

The simplest form of error checking in asynchronous systems is to incorporate a parity bit, which may be even or odd.

Even parity requires the total number of data bits at logic 1 plus the parity bit to equal an even number. The communications hardware at the transmission end calculates the parity required and sets the parity bit to give an even number of logic 1 bits.

Odd parity works in the same way as even parity, except that the parity bit is adjusted so that the total number of logic 1 bits, including the parity bit, equals an odd number.

The hardware at the receiving end determines the total number of logic 1 bits and reports an error if it is not an appropriate even or odd number. The receiver hardware also detects receiver overruns and frame errors.

Statistically, use of a parity bit has only about a 50% chance of detecting an error on a high speed system. This method can detect an odd number of bits in error and will not detect an even number of bits in error. The parity bit is normally omitted if there are more sophisticated error checking schemes in place.
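The behavior of a parity check, including its blind spot for an even number of errors, can be demonstrated with a short Python sketch. The function names `parity_bit` and `parity_ok` are hypothetical; in practice this work is done by the communications hardware.

```python
def parity_bit(data_bits, mode="even"):
    """Return the parity bit that makes the total count of 1s even (or odd)."""
    ones = sum(data_bits)
    if mode == "even":
        return ones % 2
    if mode == "odd":
        return (ones + 1) % 2
    raise ValueError("mode must be 'even' or 'odd'")


def parity_ok(received_bits, mode="even"):
    """Check data bits plus parity bit, as the receiving end would."""
    expected_remainder = 0 if mode == "even" else 1
    return sum(received_bits) % 2 == expected_remainder


data = [1, 0, 0, 0, 1, 0, 0]              # ASCII 'D' (1000100)
sent = data + [parity_bit(data, "even")]

one_error = sent.copy()
one_error[0] ^= 1                          # a single bit flipped in transit

two_errors = one_error.copy()
two_errors[1] ^= 1                         # a second bit flipped as well

print(parity_ok(sent), parity_ok(one_error), parity_ok(two_errors))
```

The single-bit error is reported, but the two-bit error passes the check unnoticed because the count of logic 1 bits is even again.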

2.7 Transmission characteristics

Signaling rate (or baud rate)

The signaling rate of a communications link is a measure of how many times the physical signal changes per second and is expressed as the baud rate. An oscilloscope trace of the data transfer would show pulses at the baud rate. For a 1000 baud rate, pulses would be seen at multiples of 1 ms.

With asynchronous systems, we set the baud rate at both ends of the link so that each physical pulse has the same duration.

Data rate

The data rate or bit rate is expressed in bits per second (bps), or multiples such as kbps, Mbps and Gbps (kilo, mega and gigabits per second). This represents the actual number of data bits transferred per second. An example is a 1000 baud RS-232 link transferring a frame of 10 bits, being 7 data bits plus a start, stop and parity bit. Here the baud rate is 1000 baud, but the data rate is 700 bps.

Although there is a tendency to confuse baud rate and bit rate, they are not the same. Whereas the baud rate indicates the number of signal changes per second, the bit rate indicates the number of bits transferred per second, which depends on how many bits each signal change represents. In simple baseband systems such as RS-232, the baud rate equals the bit rate. Where each signal change represents more than one bit, as with the modem modulation techniques described in the next paragraph, the bit rate exceeds the baud rate. For ALL systems, the data rate is less than the bit rate due to overheads such as start, stop, and parity bits (asynchronous systems) or fields such as address and error detection fields in synchronous system frames.

There are sophisticated modulation techniques, used particularly in modems, that allow more than one bit to be encoded within a signal change. The ITU V.22bis full duplex standard, for example, defines a technique called quadrature amplitude modulation, which effectively increases a baud rate of 600 to a data rate of 2400 bps. Irrespective of the methods used, the maximum data rate is always limited by the bandwidth of the link. These modulation techniques used with modems are discussed in Chapter 7.
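The link between baud rate and bit rate can be written as bit rate = baud rate × log2(number of distinct signal states). The Python sketch below applies this to the figures quoted above, assuming 16 signal states (4 bits per signal change), which is consistent with 600 baud giving 2400 bps. The function name `bit_rate` is hypothetical.

```python
import math


def bit_rate(baud: float, signal_states: int) -> float:
    """Bits per second when each signal change selects one of several states."""
    return baud * math.log2(signal_states)


print(bit_rate(1000, 2))   # two-state baseband link: bit rate equals baud rate
print(bit_rate(600, 16))   # 16 states carry 4 bits per signal change
```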

Bandwidth

The single most important factor that limits communication speeds is the bandwidth of the link. Bandwidth is generally expressed in hertz (Hz), meaning cycles per second. This represents the maximum frequency at which signal changes can be handled before attenuation degrades the message. Bandwidth is closely related to the transmission medium, ranging from around 5000 Hz for the public telephone system to the GHz range for optical fiber cable.

As a signal tends to attenuate over distance, communications links may require repeaters placed at intervals along the link, to boost the signal level.

Calculation of the theoretical maximum data transfer rate uses the Nyquist formula and involves the bandwidth and the number of levels encoded in each signaling element, as described in Chapter 4.

Signal to noise ratio

The signal to noise (S/N) ratio of a communications link is another important limiting factor. Sources of noise may be external or internal, as discussed in Chapter 6.

The maximum practical data transfer rate for a link is mathematically related to the bandwidth, S/N ratio and the number of levels encoded in each signaling element. As the S/N decreases, so does the bit rate. See Chapter 4 for a definition of the Shannon-Hartley Law that gives the relationships.
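As a preview of the relationships covered in Chapter 4, the standard textbook forms are the Nyquist limit, C = 2B log2(M), and the Shannon-Hartley limit, C = B log2(1 + S/N), where B is the bandwidth in hertz, M the number of signal levels and S/N the signal to noise power ratio. The Python sketch below evaluates both; the 3000 Hz bandwidth and the S/N values are illustrative figures only, and the function names are hypothetical.

```python
import math


def nyquist_limit(bandwidth_hz: float, levels: int) -> float:
    """Maximum bit rate of a noiseless channel with the given signal levels."""
    return 2 * bandwidth_hz * math.log2(levels)


def shannon_limit(bandwidth_hz: float, snr_db: float) -> float:
    """Maximum bit rate of a noisy channel; S/N is given in decibels."""
    snr = 10 ** (snr_db / 10)  # convert decibels to a power ratio
    return bandwidth_hz * math.log2(1 + snr)


print(nyquist_limit(3000, 2))
print(round(shannon_limit(3000, 30)))
print(round(shannon_limit(3000, 20)))
```

Lowering the S/N ratio from 30 dB to 20 dB in the last two lines reduces the achievable bit rate, in line with the statement above.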

Data throughput

As data is always carried within a protocol envelope, ranging from a character frame to sophisticated message schemes, the data transfer rate will be less than the bit rate. As explained in Chapter 9, the amount of redundant data around a message packet increases as it passes down the protocol stack in a network. This means that the ratio of non-message data to ‘real’ information may be a significant factor in determining the effective transmission rate, sometimes referred to as the throughput.

Error rate

Error rate is related to factors such as S/N ratio, noise, and interference. There is generally a compromise between transmission speed and the allowable error rate, depending on the type of application. Ordinarily, an industrial control system cannot allow errors and is designed for maximum reliability of data transmission. This means that an industrial system will be comparatively slow in data transmission terms. As data transmission rates increase, there is a point at which the number of errors becomes excessive. Protocols handle this by requesting a retransmission of packets. Obviously, the number of retransmissions will eventually reach the point at which a high apparent data rate actually gives a lower real message rate, because much of the time is being used for retransmission.
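The trade-off between speed and retransmissions can be illustrated with a simplified model. The sketch below assumes that bit errors are independent, that every frame containing an error is detected and resent in full, and that nothing else slows the link. All figures are hypothetical and chosen only to show the effect.

```python
def frame_success_probability(bit_error_rate: float, frame_bits: int) -> float:
    """Probability that every bit of a frame arrives intact."""
    return (1 - bit_error_rate) ** frame_bits


def effective_rate(bit_rate: float, bit_error_rate: float,
                   frame_bits: int) -> float:
    """Rate at which bits arrive in good frames once resends are counted."""
    return bit_rate * frame_success_probability(bit_error_rate, frame_bits)


# Hypothetical links: the faster one suffers a much higher bit error rate.
slow = effective_rate(9600, 1e-6, 1000)
fast = effective_rate(19200, 1e-3, 1000)
print(round(slow), round(fast))
```

Under these assumptions the faster link delivers fewer good bits per second than the slower one, because most of its 1000-bit frames have to be sent more than once.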

2.8 Data coding

An agreed standard code allows a receiver to understand the messages sent by a transmitter. The number of bits in the code determines the maximum number of unique characters or symbols that can be represented. The most common codes are described on the following pages.

Baudot code

Although not in use much today, the Baudot code is of historical importance. It was invented in 1874 by Maurice Emile Baudot and is considered to be the first uniform-length code. Having five bits, it can represent 32 (2^5) characters and is suitable for use in a system requiring only letters and a few punctuation and control codes. The main use of this code was in early teleprinter machines.

A modified version of the Baudot code was adopted by the ITU as the standard for telegraph communications. This uses two ‘shift’ characters for letters and numbers and was the forerunner for the modern ASCII and EBCDIC codes.

ASCII code

The most common character set in the western world is the American Standard Code for Information Interchange, or ASCII (see Table 2.3).

This code uses a 7-bit string giving 128 (2^7) characters, consisting of:

- Upper and lower case letters

- Numerals 0 to 9

- Punctuation marks and symbols

- A set of control codes, consisting of the first 32 characters, which are used by the communications link itself and are not printable

For example: D = ASCII code in binary 1000100.

A communications link setup for 7-bit data strings can only handle hexadecimal values from 00 to 7F. For full hexadecimal data transfer, an 8-bit link is needed, with each packet of data consisting of a byte (two hexadecimal digits) in the range 00 to FF. An 8-bit link is often referred to as ‘transparent’ because it can transmit any value. In such a link, a character can still be interpreted as an ASCII value if required, in which case the eighth bit is ignored.

The full hexadecimal range can be transmitted over a 7-bit link by representing each hexadecimal digit as its ASCII equivalent. Thus the hexadecimal number 8E would be represented as the two ASCII values 38 45 (hexadecimal) (‘8’ ‘E’). The disadvantage of this technique is that the amount of data to be transferred is almost doubled, and extra processing is required at each end.
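This hexadecimal-to-ASCII technique can be sketched in Python with the built-in `bytes.hex` and `bytes.fromhex` methods. The function names `to_hex_ascii` and `from_hex_ascii` are hypothetical.

```python
def to_hex_ascii(data: bytes) -> bytes:
    """Represent each byte as two ASCII characters from 0-9 and A-F."""
    return data.hex().upper().encode("ascii")


def from_hex_ascii(text: bytes) -> bytes:
    """Recover the original bytes from their ASCII hexadecimal form."""
    return bytes.fromhex(text.decode("ascii"))


encoded = to_hex_ascii(bytes([0x8E]))
print(encoded, [f"{b:02X}" for b in encoded])
print(from_hex_ascii(encoded))
```

Every character produced is below hexadecimal 80, so it passes over a 7-bit link, at the cost of sending two characters for each original byte.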

ASCII control codes can be accessed directly from a PC keyboard by pressing the Control key [Ctrl] together with another key. For example, Control-A (^A) generates the ASCII code start of header (SOH).
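The conventional mapping from a Control key combination to its ASCII control code keeps only the lower five bits of the key's own ASCII code. The Python sketch below shows this; the function name `control_code` is hypothetical, and individual keyboards and terminal programs may handle some combinations differently.

```python
def control_code(key: str) -> int:
    """Return the ASCII control code conventionally produced by Ctrl + key."""
    code = ord(key.upper())
    if not 0x40 <= code <= 0x5F:       # '@', 'A' to 'Z', '[', '\', ']', '^', '_'
        raise ValueError("no control code for this key")
    return code & 0x1F                 # keep the lower five bits


print(control_code("A"))   # SOH
print(control_code("M"))   # CR
print(control_code("["))   # ESC
```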

The ASCII Code is the most common code used for encoding characters for data communications. It is a 7-bit code and, consequently, there are only 2^7 = 128 possible combinations of the seven binary digits (bits), ranging from binary 0000000 to 1111111 or hexadecimal 00 to 7F.

Each of these 128 codes is assigned to specific control codes or characters as specified by the following standards:

- ANSI-X3.4

- ISO-646

- ITU alphabet #5

The ASCII Table is the reference table used to record the bit value of every character defined by the code. There are many different forms of the table, but all contain the same basic information according to the standards. Two types are shown here.

Table 2.3 shows the condensed form of the ASCII Table, where all the characters and control codes are presented on one page. This table shows the code for each character in hexadecimal (HEX) and binary digits (BIN) values. Sometimes the decimal (DEC) values are also given in small numbers in each box.

This table works like a matrix, where the MSB (most significant bits – the digits on the left-hand side of the written HEX or BIN codes) are along the top of the table and the LSB (least significant bits – the digits on the right-hand side of the written HEX or BIN codes) are down the left-hand side of the table. Some examples of the HEX and BIN values are given below:

Table 2.4 and Table 2.5 show the form commonly used in printer manuals, sometimes also called the ASCII Code Conversion Table, where each ASCII character or control code is cross referenced to:

- BIN : A 7-bit binary ASCII code

- DEC : An equivalent 3 digit decimal value (0 to 127)

- HEX : An equivalent 2 digit hexadecimal value (00 to 7F)

Table 2.3

The ASCII table

Table 2.4

ASCII code conversion table

Table 2.5

ASCII code conversion table

Table 2.6

Table of control codes for the ASCII

| Character | Name | Control | 7-Bit Binary Code | Hex | Decimal |

|---|---|---|---|---|---|

| NUL | Null | ^@ | 000 0000 | 00 | 0 |

| SOH | Start of Header | ^A | 000 0001 | 01 | 1 |

| STX | Start of Text | ^B | 000 0010 | 02 | 2 |

| ETX | End of Text | ^C | 000 0011 | 03 | 3 |

| EOT | End of Transmission | ^D | 000 0100 | 04 | 4 |

| ENQ | Enquiry | ^E | 000 0101 | 05 | 5 |

| ACK | Acknowledge | ^F | 000 0110 | 06 | 6 |

| BEL | Bell | ^G | 000 0111 | 07 | 7 |

| BS | Backspace | ^H | 000 1000 | 08 | 8 |

| HT | Horizontal Tabulation | ^I | 000 1001 | 09 | 9 |

| LF | Line feed | ^J | 000 1010 | 0A | 10 |

| VT | Vertical Tabulation | ^K | 000 1011 | 0B | 11 |

| FF | Form Feed | ^L | 000 1100 | 0C | 12 |

| CR | Carriage return | ^M | 000 1101 | 0D | 13 |

| SO | Shift Out | ^N | 000 1110 | 0E | 14 |

| SI | Shift In | ^O | 000 1111 | 0F | 15 |

| DLE | Data Link Escape | ^P | 001 0000 | 10 | 16 |

| DC1 | Device Control 1 | ^Q | 001 0001 | 11 | 17 |

| DC2 | Device Control 2 | ^R | 001 0010 | 12 | 18 |

| DC3 | Device Control 3 | ^S | 001 0011 | 13 | 19 |

| DC4 | Device Control 4 | ^T | 001 0100 | 14 | 20 |

| NAK | Negative Acknowledgement | ^U | 001 0101 | 15 | 21 |

| SYN | Synchronous Idle | ^V | 001 0110 | 16 | 22 |

| ETB | End of Trans Block | ^W | 001 0111 | 17 | 23 |

| CAN | Cancel | ^X | 001 1000 | 18 | 24 |

| EM | End of Medium | ^Y | 001 1001 | 19 | 25 |

| SUB | Substitute | ^Z | 001 1010 | 1A | 26 |

| ESC | Escape | ^[ | 001 1011 | 1B | 27 |

| FS | File Separator | ^\ | 001 1100 | 1C | 28 |

| GS | Group Separator | ^] | 001 1101 | 1D | 29 |

| RS | Record Separator | ^^ | 001 1110 | 1E | 30 |

| US | Unit Separator | ^_ | 001 1111 | 1F | 31 |

| DEL | Delete, Rubout | | 111 1111 | 7F | 127 |

Least significant bits

Table 2.7

EBCDIC code table

| Bits 8765 \ Bits 4321 | 0000 | 0001 | 0010 | 0011 | 0100 | 0101 | 0110 | 0111 | 1000 | 1001 | 1010 | 1011 | 1100 | 1101 | 1110 | 1111 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0000 | NUL | SOH | STX | ETX | PF | HT | LC | DEL | | | SMM | VT | FF | CR | SO | SI |
| 0001 | DLE | DC1 | DC2 | DC3 | RES | NL | BS | IL | CAN | EM | CC | | IFS | IGS | IRS | IUS |
| 0010 | DS | SOS | FS | | BYP | LF | EOB | PRE | | | SM | | | ENQ | ACK | BEL |
| 0011 | | | SYN | | PN | RS | UC | EOT | | | | | DC4 | NAK | | SUB |
| 0100 | SP | | | | | | | | | | ¢ | . | < | ( | + | \| |
| 0101 | & | | | | | | | | | | ! | $ | * | ) | ; | ¬ |
| 0110 | - | / | | | | | | | | | | , | % | _ | > | ? |
| 0111 | | | | | | | | | | | : | # | @ | ' | = | " |
| 1000 | | a | b | c | d | e | f | g | h | i | | | | | | |
| 1001 | | j | k | l | m | n | o | p | q | r | | | | | | |
| 1010 | | | s | t | u | v | w | x | y | z | | | | | | |
| 1011 | | | | | | | | | | | | | | | | |
| 1100 | | A | B | C | D | E | F | G | H | I | | | | | | |
| 1101 | | J | K | L | M | N | O | P | Q | R | | | | | | |
| 1110 | | | S | T | U | V | W | X | Y | Z | | | | | | |
| 1111 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | | | | | | |

Most significant bits

Control codes are often difficult to detect when troubleshooting a data system, unlike printable codes, which show up as a symbol on the printer or terminal. Digital line analyzers can be used to detect and display the unique code for each of these control codes to assist in the analysis of the system.

To represent the word DATA in binary form using the 7-bit ASCII code, each letter is coded as follows:

| Character | Binary | Hex |
|---|---|---|
| D | 100 0100 | 44 |
| A | 100 0001 | 41 |
| T | 101 0100 | 54 |
| A | 100 0001 | 41 |

Referring to the ASCII table, the binary digits on the right-hand side of the binary column change by one digit for each step down the table. Consequently, the bit on the far right has become known as the least significant bit (LSB) because it changes the overall value so little. The bit on the far left has become known as the most significant bit (MSB) because it changes the overall value so much.

According to the reading conventions in the western world, words and sentences are read from left to right. When looking at the ASCII code for a character, we would therefore read the MSB, which is on the left-hand side, first. In data communications, however, the convention is to transmit the LSB of each character first and the MSB last. The characters themselves are still usually sent in the conventional reading sequence in which they are generated. For example, if the word D-A-T-A is to be transmitted, the characters are transferred in that sequence, but the 7-bit ASCII code for each character is ‘reversed’.

Consequently, the bit pattern that is observed on the communication link will be as follows, reading each bit in order from right to left.

Adding the stop bit (1) and parity bit (1 or 0) and the start bit (0) to the ASCII character, the pattern indicated above is developed with even parity. For example, an ASCII ‘A’ character is sent as:
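The framing described above can be sketched as follows. This is an illustration only, assuming 7 data bits, even parity and one stop bit; the function name `frame_7e1` is hypothetical. The bits are listed in the order they appear on the line in time (first transmitted bit first), whereas a line diagram is read from right to left.

```python
def frame_7e1(char: str) -> list[int]:
    """Build the bit sequence for one character: start, 7 data bits, parity, stop."""
    code = ord(char)
    if code > 0x7F:
        raise ValueError("not a 7-bit ASCII character")
    data = [(code >> i) & 1 for i in range(7)]  # LSB is transmitted first
    parity = sum(data) % 2                      # even parity: total count of 1s is even
    return [0] + data + [parity] + [1]          # start bit (0) ... stop bit (1)


frame = frame_7e1("A")                 # 'A' is 100 0001 binary, 41 hexadecimal
print("".join(str(b) for b in frame))  # bits in transmission order
```

For 'A' the two 1 bits in the data already give an even count, so the parity bit is 0.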

EBCDIC

Extended binary coded decimal interchange code (EBCDIC), originally developed by IBM, uses 8 bits to represent each character. EBCDIC is similar in concept to the ASCII code, but the specific bit patterns are different and it is incompatible with ASCII. When IBM introduced its personal computer range, it adopted the ASCII code, so EBCDIC has little relevance to data communications in the industrial environment. Refer to the EBCDIC table (Table 2.7).

4-bit binary code

For purely numerical data a 4-bit binary code, giving 16 characters (2^4), is sometimes used. The numbers 0–9 are represented by the binary codes 0000 to 1001 and the remaining codes are used for decimal points. This increases transmission speed or reduces the number of connections in simple systems. The 4-bit binary code is shown in Table 2.8.

4-bit binary code

Gray code

Binary code is not ideal for some types of devices because multiple digits have to change every alternate count as the code increments. For incremental devices, such as shaft position encoders, which give a code output of shaft positions, the Gray code can be used. The advantage of this code over binary is that only one bit changes every time the value is incremented. This reduces the ambiguity in measuring consecutive angular positions. The Gray code is shown in Table 2.9.
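The single-bit-change property can be checked with a short sketch. It assumes the standard reflected binary form of the Gray code; the function names are hypothetical.

```python
def binary_to_gray(n: int) -> int:
    """Convert a binary count to reflected binary Gray code."""
    return n ^ (n >> 1)


def gray_to_binary(g: int) -> int:
    """Convert a reflected binary Gray code value back to a binary count."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


for count in range(8):
    print(count, format(count, "03b"), format(binary_to_gray(count), "03b"))
```

Going from 3 to 4 changes three bits in binary (011 to 100) but only one bit in Gray code (010 to 110), so an encoder read during the transition cannot report a value far from either position.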

Gray code

Binary coded decimal

Binary coded decimal (BCD) is an extension of the 4-bit binary code. BCD encoding converts each separate digit of a decimal number into a 4-bit binary code. Consequently, the BCD uses 4 bits to represent one decimal digit. Although 4 bits in the binary code can represent 16 numbers (from 0 to 15) only the first 10 of these, from 0 to 9, are valid for BCD.
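As a minimal sketch of the encoding, the following converts a decimal number to a group of 4-bit codes and back, rejecting the six codes that are not valid BCD. The function names `to_bcd` and `from_bcd` are hypothetical.

```python
def to_bcd(value: int, digits: int = 4) -> list[str]:
    """Encode each decimal digit of value as its own 4-bit binary code."""
    if not 0 <= value < 10 ** digits:
        raise ValueError("value does not fit in the given number of digits")
    return [format(int(d), "04b") for d in str(value).zfill(digits)]


def from_bcd(nibbles: list[str]) -> int:
    """Decode a list of 4-bit codes, most significant digit first."""
    result = 0
    for nibble in nibbles:
        digit = int(nibble, 2)
        if digit > 9:  # codes 1010 to 1111 are not valid BCD
            raise ValueError("invalid BCD code " + nibble)
        result = result * 10 + digit
    return result


print(to_bcd(1975))  # one 4-bit group per decimal digit
print(from_bcd(["0001", "1001", "0111", "0101"]))
```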

Comparison of Binary, Gray and BCD codes

BCD is commonly used on relatively simple systems such as small instruments, thumbwheels, and digital panel meters. Special interface cards and integrated circuits (ICs) are available for connecting BCD components to other intelligent devices. They can be connected directly to the inputs and outputs of PLCs.

A typical application for BCD is the setting of a parameter on a control panel from a group of thumbwheels. Each thumbwheel represents a decimal digit (from left to right; thousands, hundreds, tens and units digits). The interface connection of each digit to a PLC requires 4 wires plus a common, which would mean a total of 20 wires for a 4-digit set of thumbwheels. The number of wires, and their connections to a PLC, can be reduced to 8 by using a time division multiplexing system as shown in Figure 2.6. Each PLC output is energized in turn, and the binary code is measured by the PLC at four inputs. A similar arrangement is used in reverse for the digital display on a panel meter, using a group of four 7-segment LCD or LED displays.
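The multiplexed scan can be simulated in a few lines. This is a sketch only: the thumbwheel settings are held in a list, and the hypothetical function `read_inputs` stands in for the four shared PLC inputs, returning the code of whichever thumbwheel is selected by the energized output.

```python
def read_inputs(wheels: list[int], selected: int) -> list[int]:
    """Simulate the four shared PLC inputs: bits of the selected wheel, LSB first."""
    digit = wheels[selected]
    return [(digit >> bit) & 1 for bit in range(4)]


def scan_thumbwheels(wheels: list[int]) -> int:
    """Energize each PLC output in turn and assemble the decimal setting."""
    value = 0
    for selected in range(len(wheels)):       # one output per thumbwheel
        bits = read_inputs(wheels, selected)  # four inputs shared by all wheels
        digit = sum(b << i for i, b in enumerate(bits))
        if digit > 9:
            raise ValueError("invalid BCD code on inputs")
        value = value * 10 + digit
    return value


# Thousands, hundreds, tens and units wheels set to 1, 9, 7 and 5
print(scan_thumbwheels([1, 9, 7, 5]))
```

With four outputs and four shared inputs, the PLC needs 8 connections instead of 20, at the cost of reading one digit per scan step.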

BCD Thumbwheel switches and connections to PLC

2.9 The universal asynchronous receiver/transmitter (UART)

The start, stop and parity bits used in asynchronous transmission systems are usually physically generated by a standard integrated circuit (IC) chip that is part of the interface circuitry between the microprocessor bus and the line driver (or receiver) of the communications link. This type of IC is called a UART (universal asynchronous receiver/transmitter) or sometimes an ACE (asynchronous communications element).

Similar devices used for synchronous data communications are called USRTs (universal synchronous receiver/transmitters). Collectively, these devices are called USARTs (universal synchronous/asynchronous receiver/transmitters). The outputs of a UART are not designed to interface directly with the communications link. Additional devices, called line drivers and line receivers, are necessary to give out and receive the voltages appropriate to the communications link.

The 8250, 16450 and 16550 are examples of UARTs, and the 8251 is an example of a USART.

Typical connection details of the UART

The main purpose of the UART is to look after all the routine ‘housekeeping’ matters associated with preparing the 8-bit parallel output of a microprocessor for asynchronous serial data communication. The timing pulses are derived from the microprocessor master clock through external connections.

When transmitting, the UART:

- Sets the baud rate

- Accepts character bits from the microprocessor as a parallel group

- Generates a start bit

- Adds the data bits in a serial group

- Determines the parity and adds a parity bit (if required)

- Ends transmission with a stop bit (sometimes 2 stop bits)

- Then signals the microprocessor that it is ready for the next character

- Coordinates handshaking when required

The UART has a separate signal line for transmit (TX) and one for receive (RX) so that it can operate in the full-duplex or a half-duplex mode. Other connections on the UART provide hardware signals for handshaking, the method of providing some form of ‘interlocking’ between two devices at the ends of a data communications link. Handshaking is discussed in more detail in Chapter 3.

When receiving, the UART:

- Sets the baud rate at the receiver

- Recognizes the start bit

- Reads the data bits in a serial group

- Reads the parity bit and checks the parity

- Recognizes the stop bit(s)

- Transfers the character as a parallel group to the microprocessor for further processing

- Coordinates handshaking when required

- Checks for data errors and flags the error bit in the status register
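The receive steps above can be sketched in software to show what the UART does in hardware. The sketch assumes a frame of one start bit, 7 data bits sent LSB first, an even parity bit and one stop bit; the function name `receive_7e1` and the error labels are hypothetical.

```python
def receive_7e1(bits: list[int]) -> tuple[str, list[str]]:
    """Decode one 10-bit frame and report any errors found."""
    if len(bits) != 10 or bits[0] != 0:
        raise ValueError("frame must be 10 bits beginning with a start bit (0)")
    data, parity, stop = bits[1:8], bits[8], bits[9]
    errors = []
    if (sum(data) + parity) % 2 != 0:  # even parity: count of 1s must be even
        errors.append("parity")
    if stop != 1:                      # a missing stop bit is a framing error
        errors.append("framing")
    code = sum(b << i for i, b in enumerate(data))  # LSB arrived first
    return chr(code), errors


print(receive_7e1([0, 1, 0, 0, 0, 0, 0, 1, 0, 1]))  # a clean frame
print(receive_7e1([0, 1, 1, 0, 0, 0, 0, 1, 0, 1]))  # one data bit corrupted
```

In a real UART the error list corresponds to flag bits in the status register, which the program reads after each character.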

This removes the burden of programming the above routines in the microprocessor and, instead, they are handled transparently by the UART. All the program does with serial data is to simply write/read bytes to/from the UART.

The UART transmitter

A byte received from the microprocessor for transmission is written to the I/O address of the UART’s transmitter section. The bits to be transmitted are loaded into a shift register, then shifted out on the negative transition of the transmit data clock. This pulse rate sets the baud rate. When all the bits have been shifted out of the transmitter’s shift register, the next packet is loaded and the process is repeated. The word ‘packet’ is used to indicate start, data, parity and stop bits all packaged together. Some authors refer to the packet as a serial data unit (SDU).

The UART transmitter

Between the transmitter holding register and the shift register is a section called the SDU (serial data unit) formation. This section constructs the actual packet to be loaded into the shift register.

In full-duplex communications, the software needs only to test the value of the transmitter buffer empty (TBE) flag to decide whether to write a byte to the UART. In half-duplex communications, the modem must swap between transmitter and receiver states. Hence, the software must check both the transmitter buffer and the transmitter’s shift register, as there may still be some data there.
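The difference between the two checks can be shown with a small simulation. Everything here is hypothetical: the `SimulatedUart` class, the `TSRE` flag name and the bit positions are chosen for illustration and do not describe the register layout of any particular chip.

```python
class SimulatedUart:
    TBE = 0x01   # transmitter buffer (holding register) empty
    TSRE = 0x02  # transmitter shift register empty

    def __init__(self) -> None:
        self.holding = None   # byte waiting in the holding register
        self.shifting = None  # byte currently being shifted out
        self.line = []        # bytes that have fully left the UART

    def status(self) -> int:
        flags = 0
        if self.holding is None:
            flags |= self.TBE
        if self.shifting is None:
            flags |= self.TSRE
        return flags

    def write(self, byte: int) -> None:
        if not self.status() & self.TBE:
            raise RuntimeError("holding register still full")
        self.holding = byte

    def tick(self) -> None:
        """Advance the simulation by one character time."""
        if self.shifting is not None:
            self.line.append(self.shifting)
            self.shifting = None
        if self.holding is not None:
            self.shifting, self.holding = self.holding, None


def send(uart: SimulatedUart, data: bytes, half_duplex: bool = False) -> None:
    for byte in data:
        while not uart.status() & uart.TBE:  # full duplex: TBE alone is enough
            uart.tick()
        uart.write(byte)
    if half_duplex:
        idle = uart.TBE | uart.TSRE
        while (uart.status() & idle) != idle:  # wait until the shift register drains
            uart.tick()


uart = SimulatedUart()
send(uart, b"OK", half_duplex=True)
print(bytes(uart.line))  # all data has left the UART; safe to switch to receive
```

Without the second check, `send` returns while the last characters are still inside the UART, which is harmless in full duplex but would cut off the message if the line were turned around at that moment.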

The UART receiver